Distributed Systems Practice Notes

Big Data - Analyze Big Data with Hadoop Lab

November 08, 2018

This lab uses Hadoop on Amazon EMR to analyze a provided CloudFront log file. The provided script creates a Hive table, parses the log file with the regular expression serializer/deserializer (RegEx SerDe), writes the parsed result to the table, runs a HiveQL query that returns the total number of requests per operating system for a given time frame, and writes the query result to an S3 bucket.
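To make the script's job concrete, here is a minimal Python sketch of the same idea. It is not the Hive script itself: the field positions, the names `parse_line` and `count_requests_per_os`, and the assumption that the OS is the text between "(" and the first ";" in the URL-encoded user-agent field are illustrative guesses about the log layout.

```python
import re
from collections import Counter
from datetime import date

# Assumed layout: whitespace-separated fields, date first, user agent eleventh.
DATE_FIELD = 0
USER_AGENT_FIELD = 10
OS_PATTERN = re.compile(r"\(([^;]+);")  # text between "(" and the first ";"


def parse_line(line):
    """Return (request_date, os_name), or None for comments and bad lines."""
    if line.startswith("#"):
        return None  # CloudFront logs start with "#"-prefixed header lines
    fields = line.split()
    if len(fields) <= USER_AGENT_FIELD:
        return None
    match = OS_PATTERN.search(fields[USER_AGENT_FIELD])
    if match is None:
        return None
    try:
        request_date = date.fromisoformat(fields[DATE_FIELD])
    except ValueError:
        return None
    return request_date, match.group(1)


def count_requests_per_os(lines, start, end):
    """Count requests per OS for dates in [start, end], like a GROUP BY."""
    counts = Counter()
    for line in lines:
        parsed = parse_line(line)
        if parsed is None:
            continue
        request_date, os_name = parsed
        if start <= request_date <= end:
            counts[os_name] += 1
    return counts
```

Calling `count_requests_per_os(open("some.log"), date(2014, 7, 5), date(2014, 8, 5))` would then play the role of the HiveQL query. The file name and dates here are placeholders.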

Learning Outcomes

- How to set up an EMR cluster

- How to use a Hive script to process data

Official Links

Qwiklabs: Analyze Big Data with Hadoop

Operations

0: Create an S3 bucket for Analysis Output

- Set the bucket name to hadoop-1234

- Leave the remaining options at their defaults

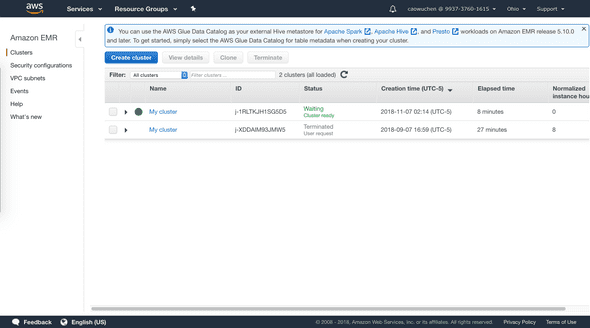
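The same bucket could also be created from code. The sketch below assumes boto3 and an S3 client created by the caller. The helper names and the region are assumptions, not part of the lab. The one real quirk it encodes is that `create_bucket` takes a `LocationConstraint` for every region except us-east-1.

```python
def bucket_request(name, region):
    """Build keyword arguments for S3 create_bucket in the given region."""
    kwargs = {"Bucket": name}
    # us-east-1 is the default region and must not be passed as a constraint.
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs


def create_output_bucket(s3_client, name="hadoop-1234", region="us-west-2"):
    """Create the analysis output bucket; all other options stay default."""
    return s3_client.create_bucket(**bucket_request(name, region))
```

With boto3 installed, `create_output_bucket(boto3.client("s3", region_name="us-west-2"))` would be the call. Bucket names are global, so hadoop-1234 itself may already be taken.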

1: Launch an EMR cluster

- Open the EMR console

- Click the Create cluster button

- In the General Configuration section:

  - Cluster name: My cluster

  - S3 folder: s3://hadoop-1234/

- In the Hardware configuration section:

  - Instance type: m4.large

  - Number of instances: 2

- In the Security and access section:

  - EC2 key pair: Proceed without an EC2 key pair

  - Permissions: Custom

  - EMR role: EMR_DefaultRole

  - EC2 instance profile: EMR_EC2_DefaultRole

- Click Create cluster
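For reference, the console choices above map roughly onto a boto3 `run_job_flow` request. This is a sketch under assumptions: the release label is a guess (the lab does not state one), the S3 folder is treated as the log location, and the instance profile is written as `EMR_EC2_DefaultRole`, the default name AWS creates. The helper names are hypothetical.

```python
def cluster_request(bucket="hadoop-1234", release_label="emr-5.19.0"):
    """Build run_job_flow arguments mirroring the console settings."""
    return {
        "Name": "My cluster",
        "LogUri": f"s3://{bucket}/",      # assumed meaning of "S3 folder"
        "ReleaseLabel": release_label,    # assumption: not given in the lab
        "Applications": [{"Name": "Hive"}],
        "Instances": {
            "MasterInstanceType": "m4.large",
            "SlaveInstanceType": "m4.large",
            "InstanceCount": 2,           # one master node plus one core node
            # Keep the cluster in Waiting state so a step can be added later.
            "KeepJobFlowAliveWhenNoSteps": True,
        },
        "ServiceRole": "EMR_DefaultRole",
        "JobFlowRole": "EMR_EC2_DefaultRole",
    }


def launch_cluster(emr_client, **overrides):
    """Start the cluster and return its id (for example 'j-...')."""
    response = emr_client.run_job_flow(**cluster_request(**overrides))
    return response["JobFlowId"]
```

Omitting `Ec2KeyName` from `Instances` corresponds to proceeding without an EC2 key pair. A caller would pass `boto3.client("emr", region_name="us-west-2")` as `emr_client`.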

2: Process Log Data by Running a Hive Script

- Wait until the cluster shows the Waiting status

- Create a step:

  - Step type: Hive program

  - Name: Process logs

  - Script S3 location: s3://us-west-2.elasticmapreduce.samples/cloudfront/code/Hive_CloudFront.q

  - Input S3 location: s3://us-west-2.elasticmapreduce.samples

  - Output S3 location: s3://hadoop-1234/

  - Arguments: -hiveconf hive.support.sql11.reserved.keywords=false

- Click Add step; the script takes about 1 minute to run
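The step settings above can be sketched as a boto3 `add_job_flow_steps` call. This assumes the console's Input and Output fields are passed to the script as `INPUT` and `OUTPUT` variables through `command-runner.jar`, which is how Hive steps are usually submitted outside the console. The helper names and the `CONTINUE` failure action are illustrative choices.

```python
SCRIPT = "s3://us-west-2.elasticmapreduce.samples/cloudfront/code/Hive_CloudFront.q"
INPUT = "s3://us-west-2.elasticmapreduce.samples"


def hive_step(bucket="hadoop-1234"):
    """Build the 'Process logs' Hive step definition."""
    return {
        "Name": "Process logs",
        "ActionOnFailure": "CONTINUE",  # assumption: keep the cluster alive
        "HadoopJarStep": {
            "Jar": "command-runner.jar",
            "Args": [
                "hive-script", "--run-hive-script", "--args",
                "-f", SCRIPT,
                "-d", f"INPUT={INPUT}",
                "-d", f"OUTPUT=s3://{bucket}/",
                "-hiveconf", "hive.support.sql11.reserved.keywords=false",
            ],
        },
    }


def run_step(emr_client, cluster_id, step):
    """Wait for the cluster, submit the step, and block until it completes."""
    # 'cluster_running' succeeds once the cluster is RUNNING or WAITING.
    emr_client.get_waiter("cluster_running").wait(ClusterId=cluster_id)
    response = emr_client.add_job_flow_steps(JobFlowId=cluster_id, Steps=[step])
    step_id = response["StepIds"][0]
    emr_client.get_waiter("step_complete").wait(
        ClusterId=cluster_id, StepId=step_id
    )
    return step_id
```

A call such as `run_step(emr_client, cluster_id, hive_step())` covers both the wait for the Waiting status and the step submission.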

3: View the Results

- Download the 000000_0 file from the os_requests folder in the S3 bucket

- Open the file and view the result
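The result file can also be read programmatically. The sketch below assumes the file uses Hive's default field delimiter, the control character `\x01`, with one `os<delimiter>count` pair per line. The helper names are hypothetical, and the key `os_requests/000000_0` comes from the step above.

```python
HIVE_DELIMITER = "\x01"  # Hive's default field separator (Ctrl-A)


def parse_os_requests(text):
    """Turn the raw result text into a {os_name: request_count} dict."""
    counts = {}
    for line in text.split("\n"):
        if not line:
            continue  # skip the trailing empty line
        os_name, count = line.rsplit(HIVE_DELIMITER, 1)
        counts[os_name] = int(count)
    return counts


def fetch_os_requests(s3_client, bucket="hadoop-1234",
                      key="os_requests/000000_0"):
    """Download the result object from S3 and parse it."""
    body = s3_client.get_object(Bucket=bucket, Key=key)["Body"].read()
    return parse_os_requests(body.decode("utf-8"))
```

If the file looks like a single unbroken run of text in an editor, the invisible delimiter is the likely reason.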

4: Clean up

- Terminate My cluster in the EMR console
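Termination has a boto3 equivalent as well. This is a small sketch with a hypothetical helper name, assuming the cluster id returned at launch is still at hand.

```python
def terminate_cluster(emr_client, cluster_id):
    """Request termination and block until the cluster is gone."""
    emr_client.terminate_job_flows(JobFlowIds=[cluster_id])
    emr_client.get_waiter("cluster_terminated").wait(ClusterId=cluster_id)
```

The S3 bucket is not removed by this call, so the query output stays available after the cluster is terminated.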

Written by Warren, who studies distributed systems at George Washington University. You might wanna follow him on GitHub.